Physically-Based Rendering Engine | 3D Graphics

Programmer (Hobby Project)

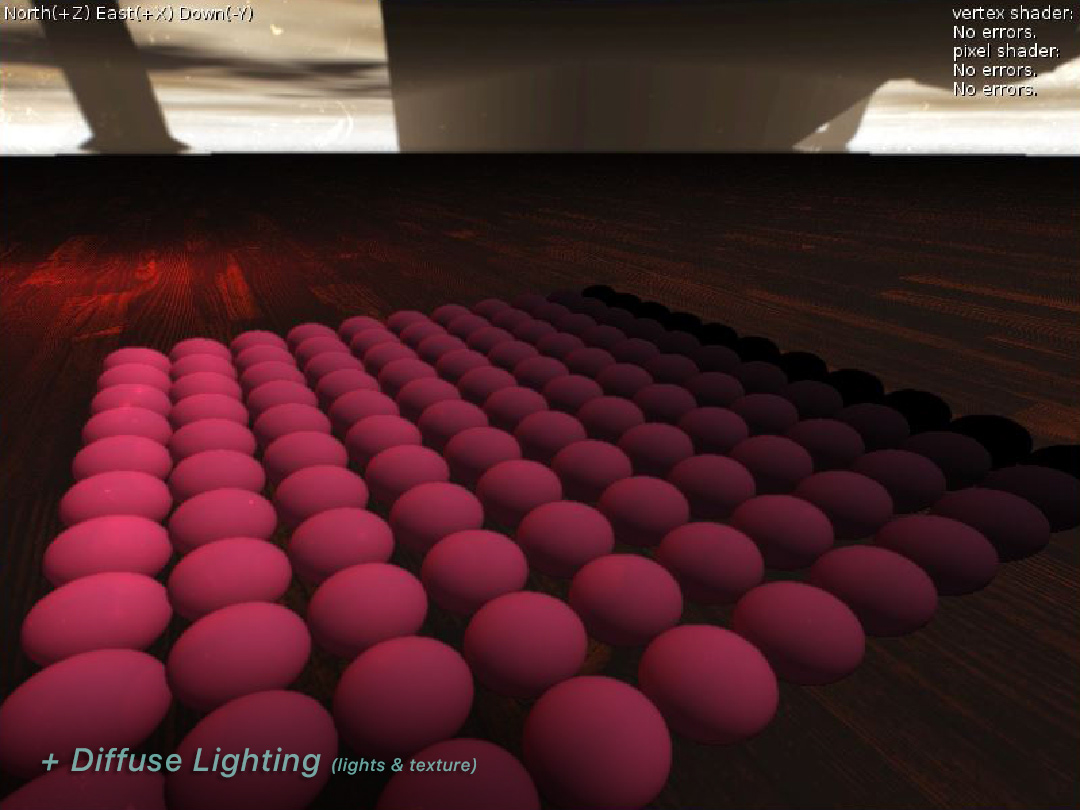

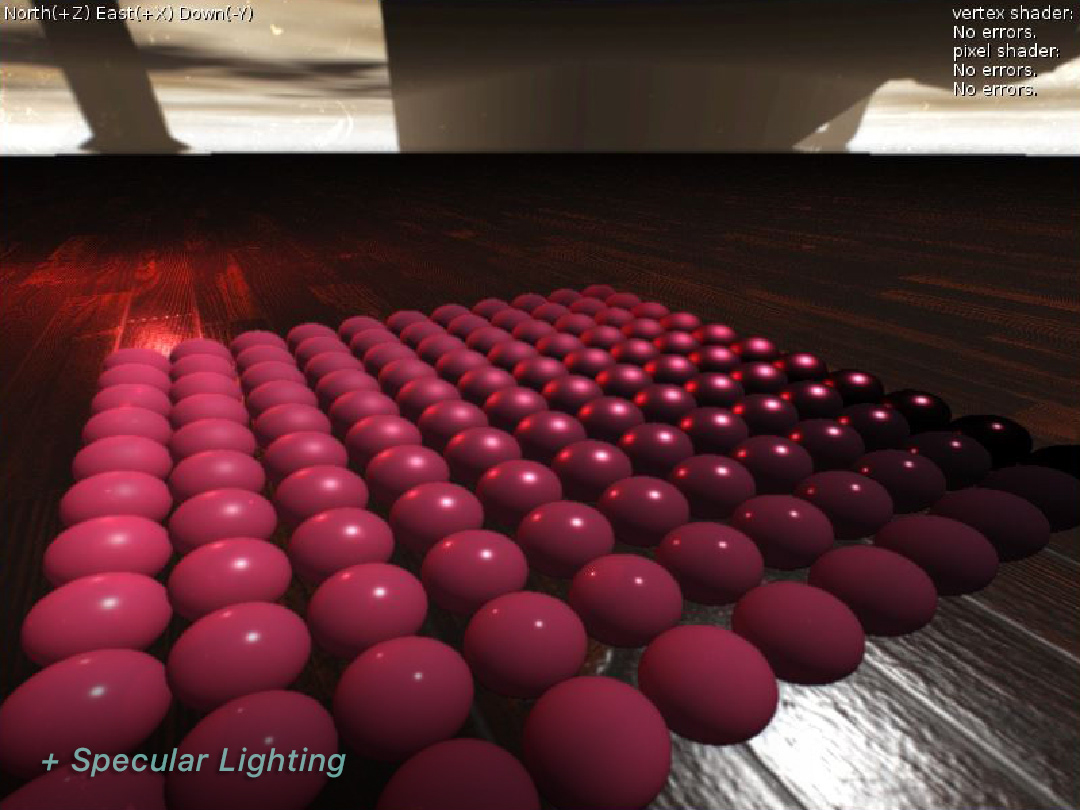

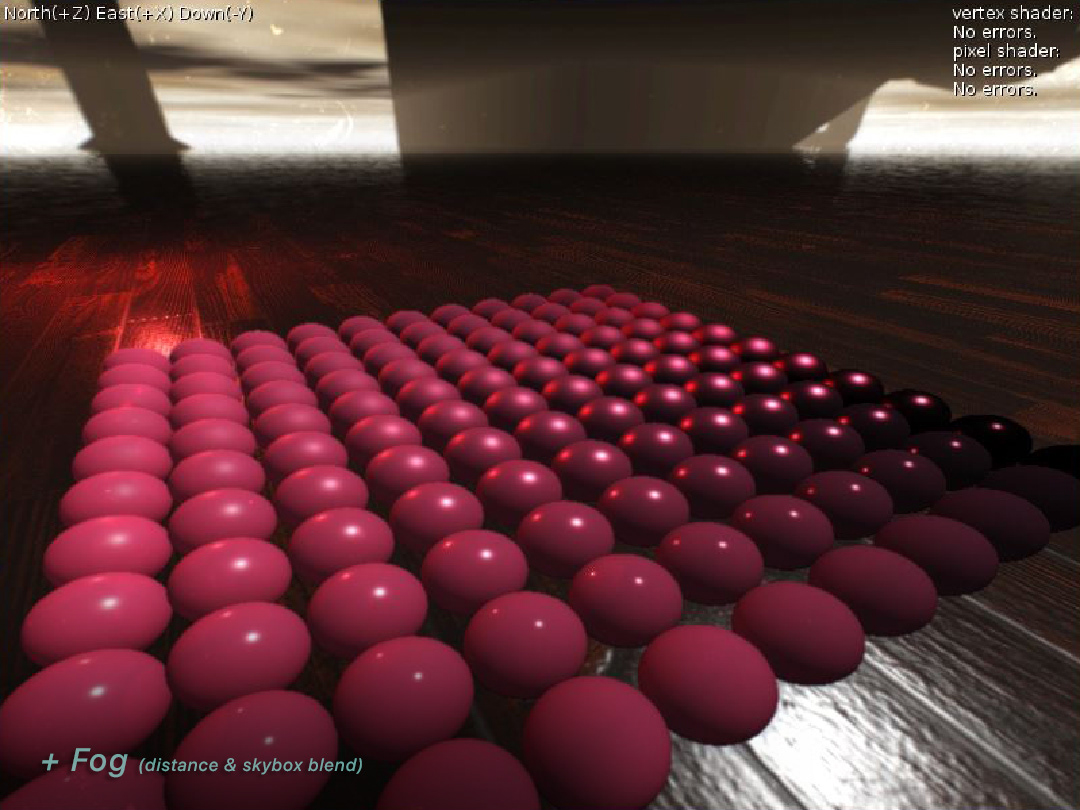

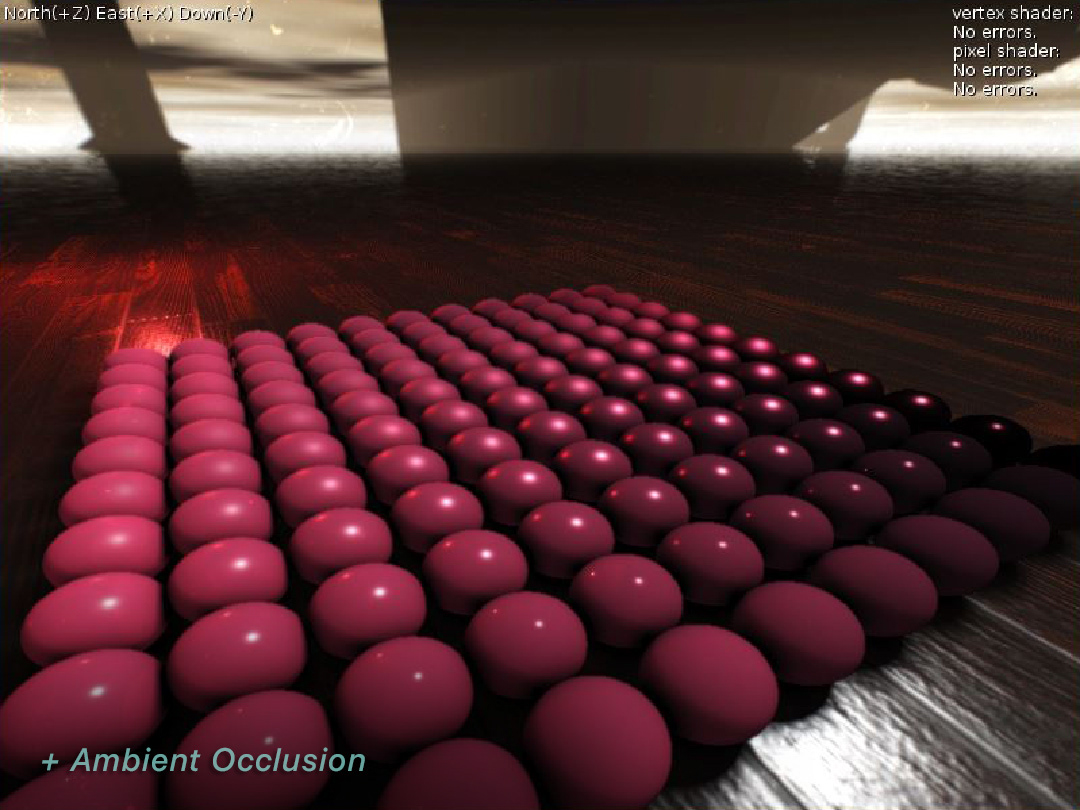

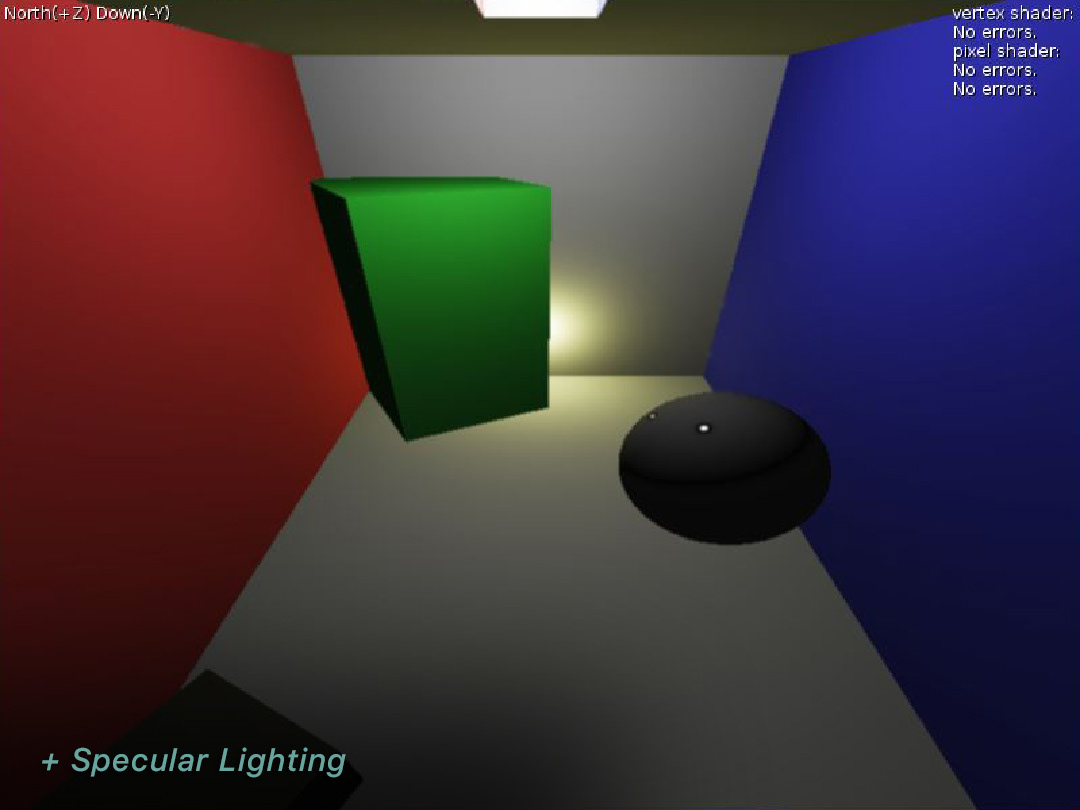

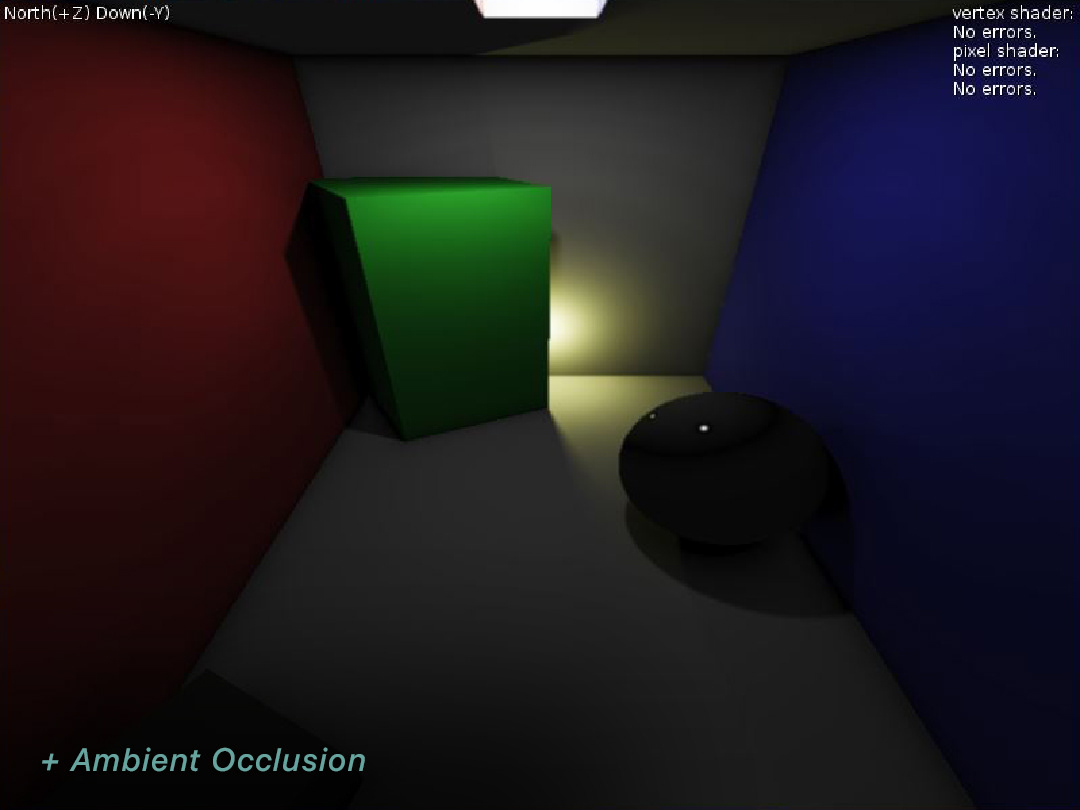

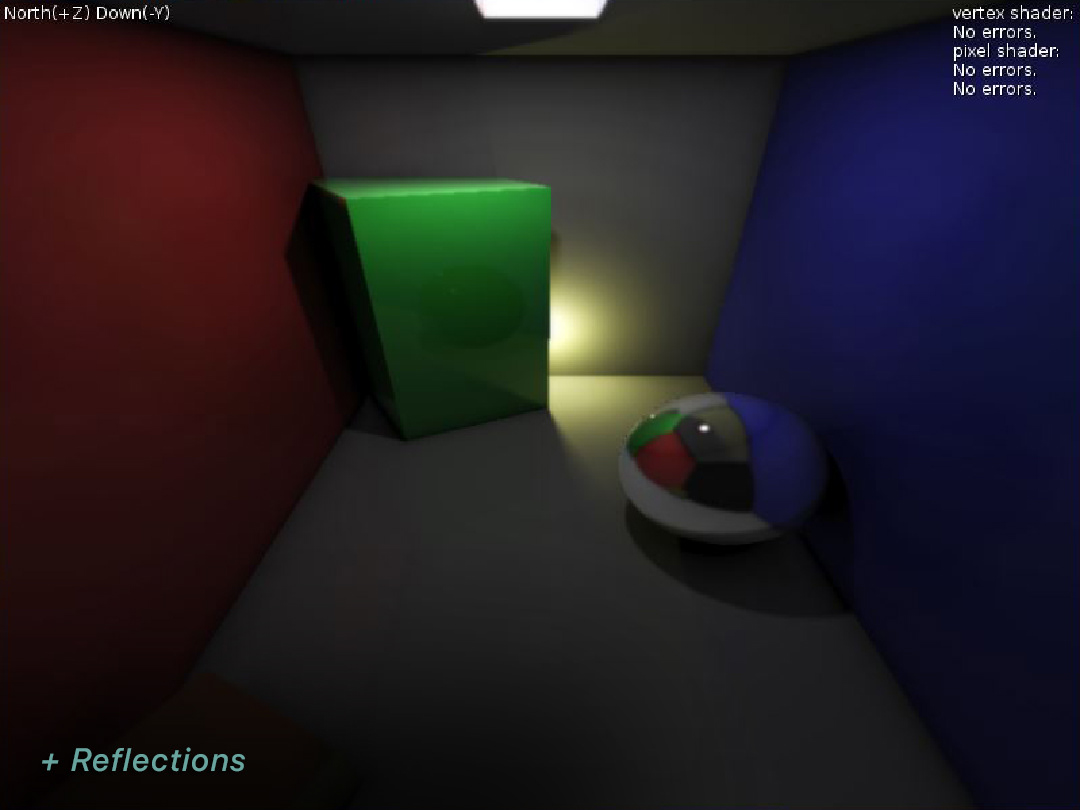

Developed a custom physically-based rendering (PBR) engine that uses ray marching on the GPU. The technique represents the 3D world being rendered with a "signed-distance field" (SDF). Rays are cast into the world from each screen pixel, mapped onto the camera plane using a semi-accurate mathematical lens model with adjustable FOV, and then "marched" along their trajectories. At each step, the ray tests its position by passing its coordinates to the SDF's distance estimator (DE) function. The DE returns a floating-point number giving the distance from that point to the nearest object. The ray is then pushed forward by that same distance, because the DE guarantees (or, for approximate estimators, nearly guarantees) that no surface lies closer than that. Once the ray is close enough to an object, it is considered to have collided with it, and color, texture, and lighting information is calculated from the hit point and the object's attributes. If a ray takes too many steps or travels too far, it is terminated and skybox/atmospheric color is calculated instead.
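The marching loop described above is commonly called sphere tracing. Below is a minimal CPU-side Python sketch of it, for readability rather than speed. It assumes a pinhole camera looking down +z with a vertical FOV and a hypothetical two-object scene (a unit sphere and a ground plane). The function names, step limits, and hit threshold are illustrative assumptions, not the engine's actual shader code, and the semi-accurate lens model is simplified here to a plain pinhole.

```python
import math

# Hypothetical scene for illustration: a unit sphere centered at (0, 0, 5)
# and an infinite ground plane at y = -1.
def scene_de(p):
    """Distance estimator (DE): distance from point p to the nearest surface."""
    x, y, z = p
    sphere = math.sqrt(x * x + y * y + (z - 5.0) ** 2) - 1.0
    plane = y + 1.0
    return min(sphere, plane)  # union of two SDFs = minimum of their distances


def camera_ray(px, py, width, height, fov_deg):
    """Pinhole camera looking down +z: map pixel (px, py) to a unit ray direction."""
    aspect = width / height
    scale = math.tan(math.radians(fov_deg) / 2.0)  # half-height of the image plane at z = 1
    # Pixel center -> coordinates in [-1, 1] (y up), scaled by FOV and aspect ratio.
    u = (2.0 * (px + 0.5) / width - 1.0) * aspect * scale
    v = (1.0 - 2.0 * (py + 0.5) / height) * scale
    length = math.sqrt(u * u + v * v + 1.0)
    return (u / length, v / length, 1.0 / length)


def march(origin, direction, max_steps=128, max_dist=100.0, hit_eps=1e-4):
    """Sphere tracing. Returns the distance travelled to the hit,
    or None if the ray missed (shade the skybox instead)."""
    t = 0.0
    for _ in range(max_steps):
        p = tuple(o + t * k for o, k in zip(origin, direction))
        dist = scene_de(p)
        if dist < hit_eps:
            return t  # close enough to a surface: count it as a collision
        t += dist  # safe step: nothing is closer than dist
        if t > max_dist:
            break  # travelled too far
    return None  # too many steps or too far: treat as a miss
```

For example, `march((0.0, 0.0, 0.0), (0.0, 0.0, 1.0))` takes four unit steps and returns `4.0`, the near side of the sphere, while a ray pointing straight up grows its steps each iteration and returns `None`.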

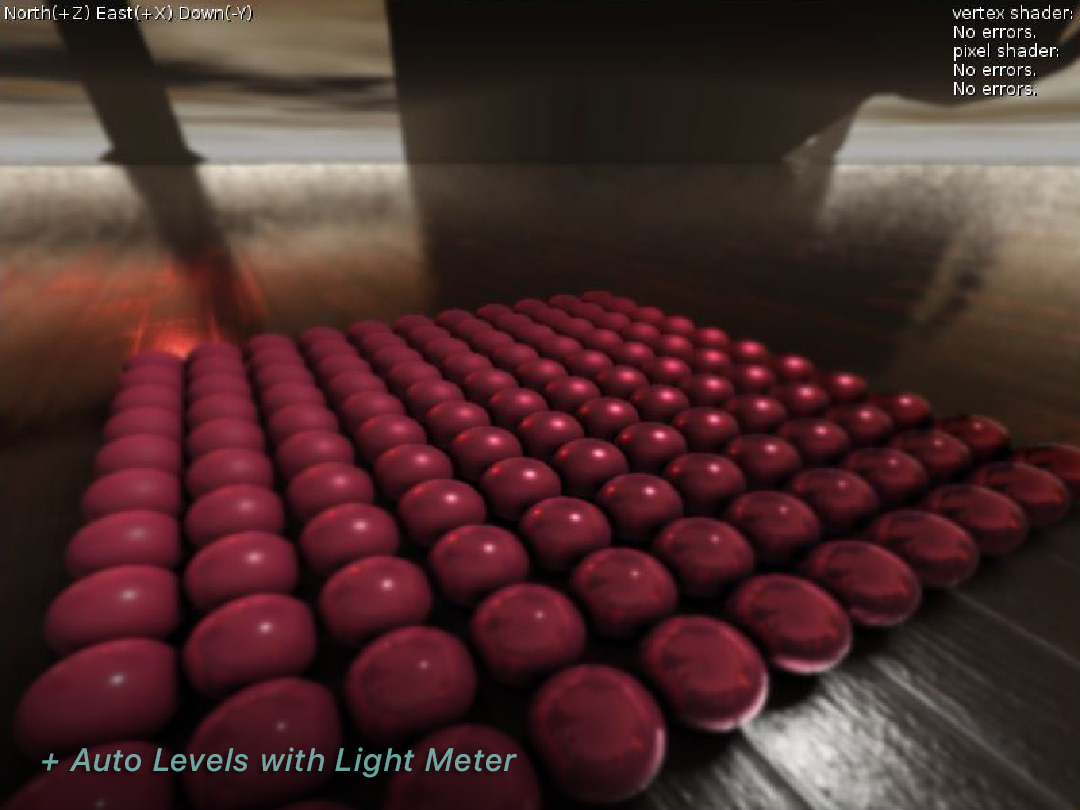

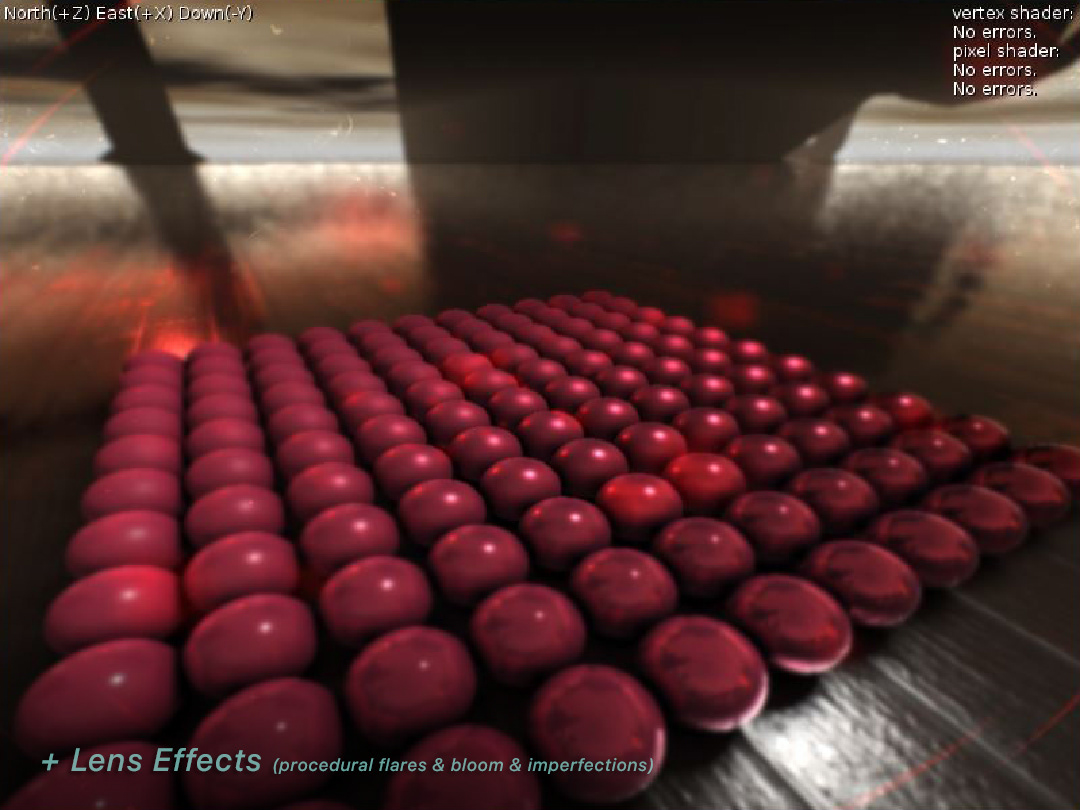

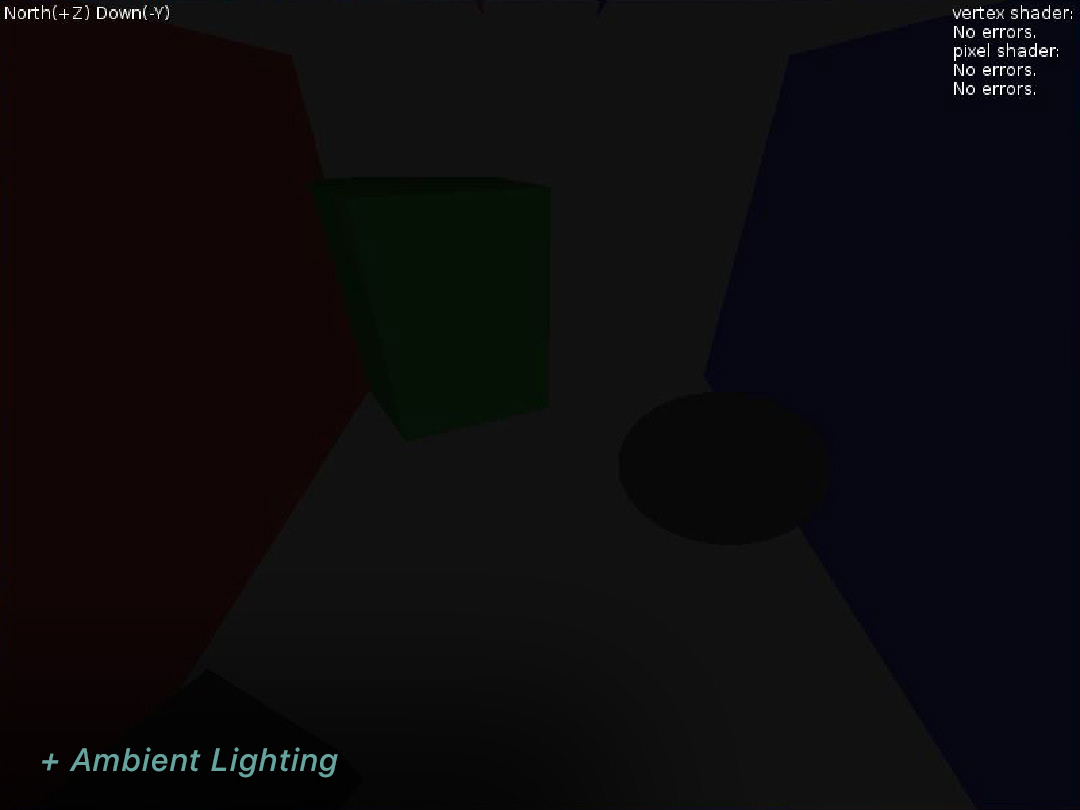

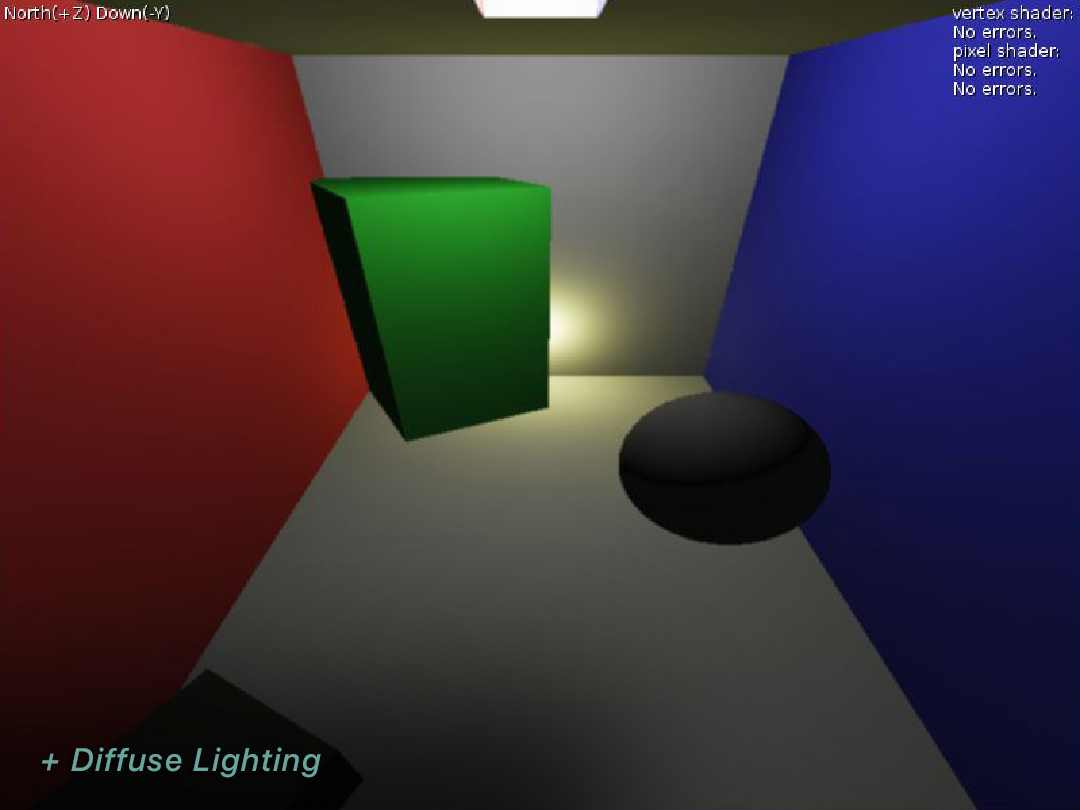

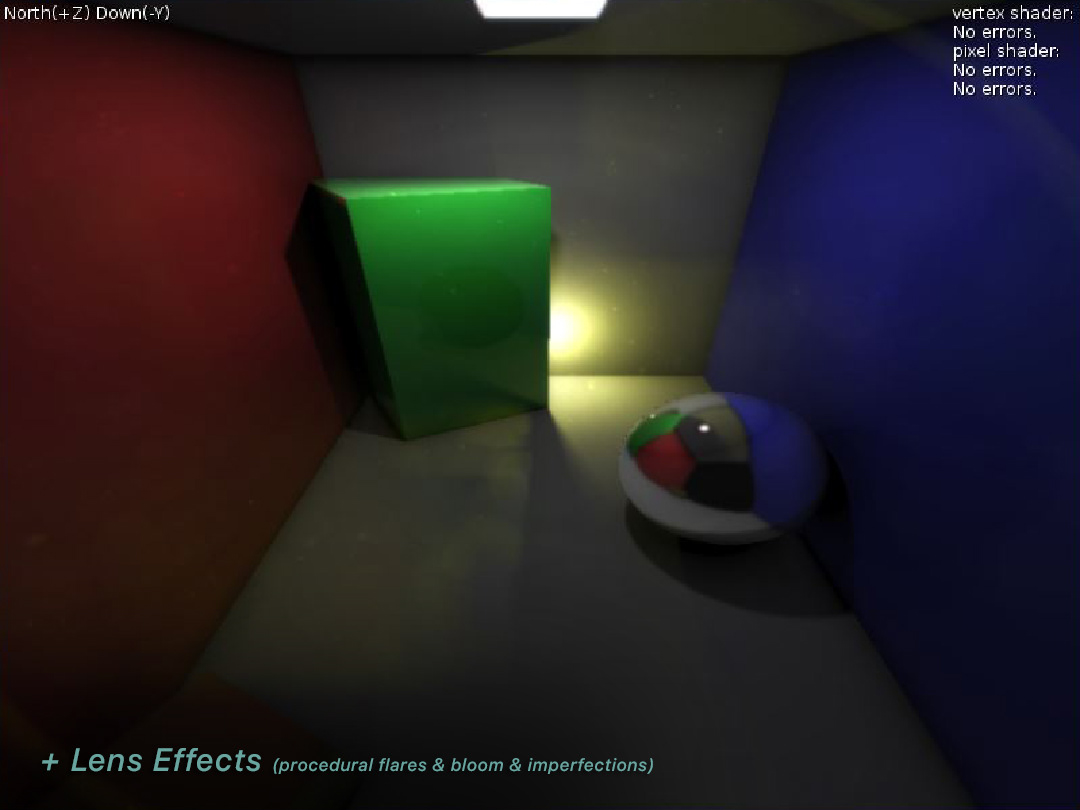

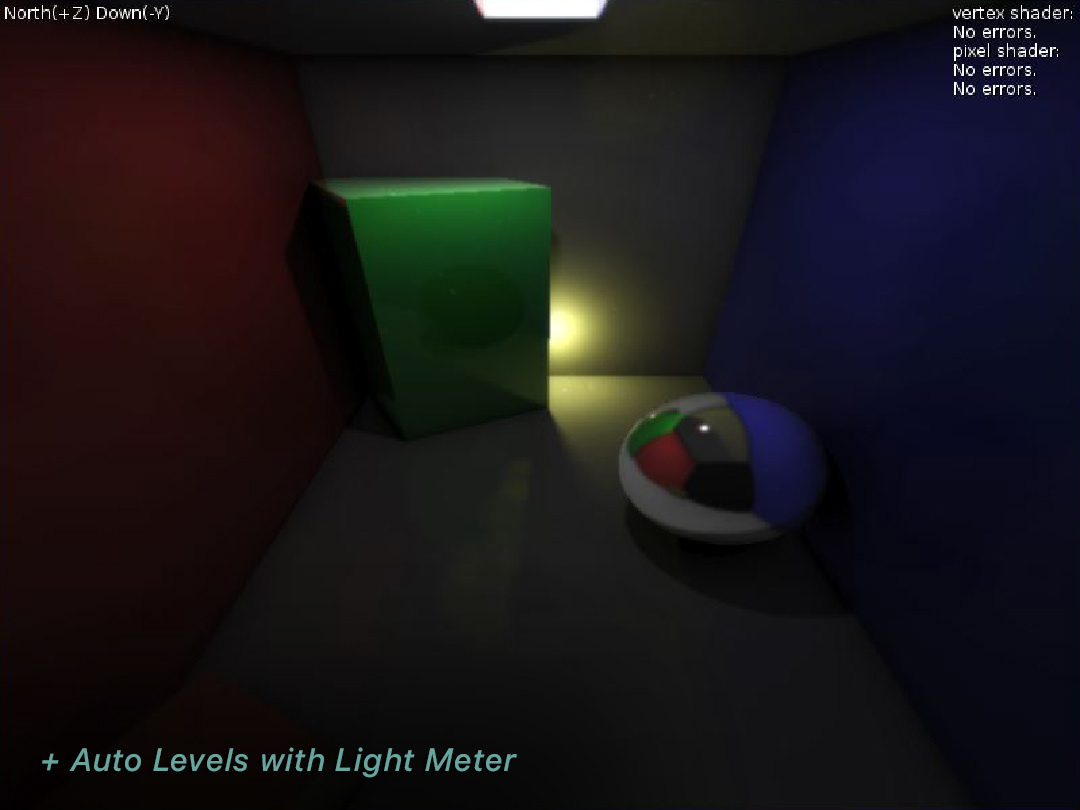

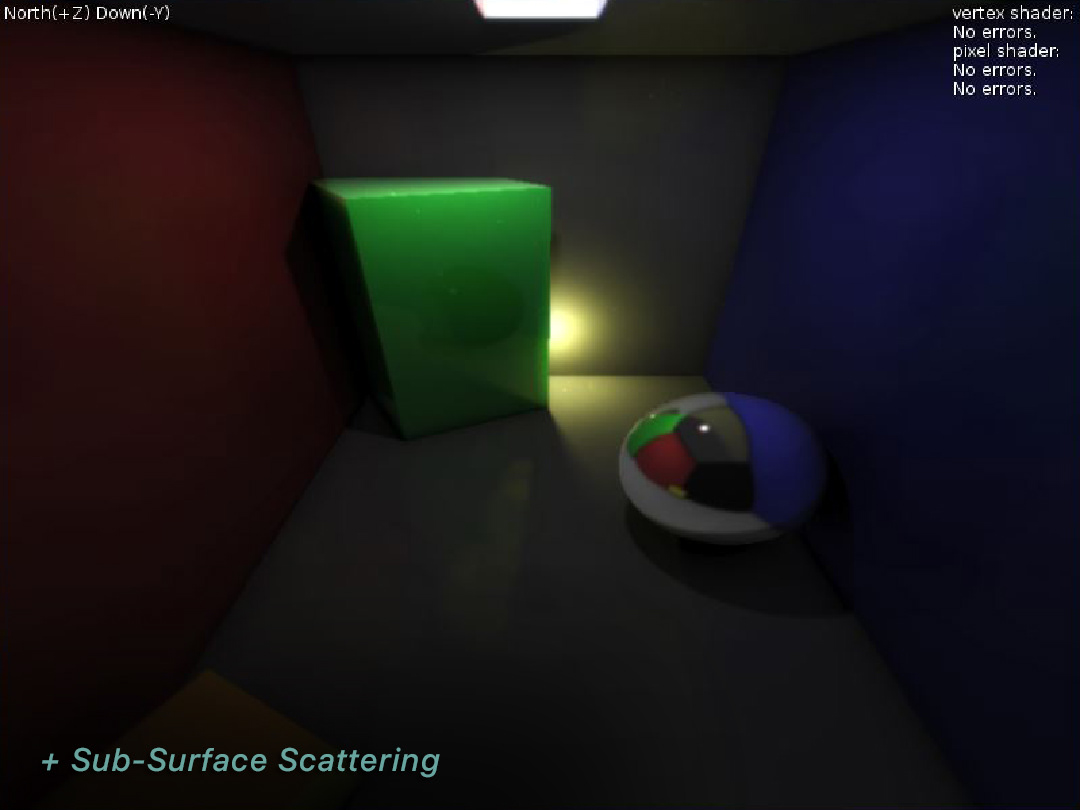

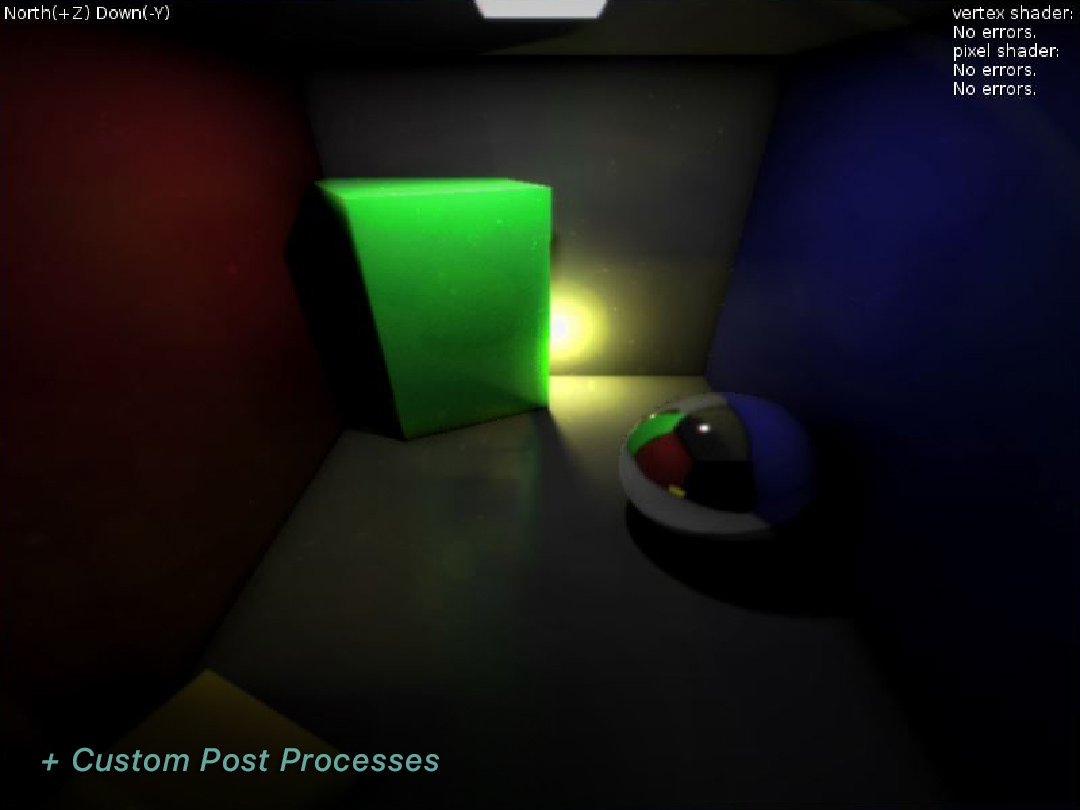

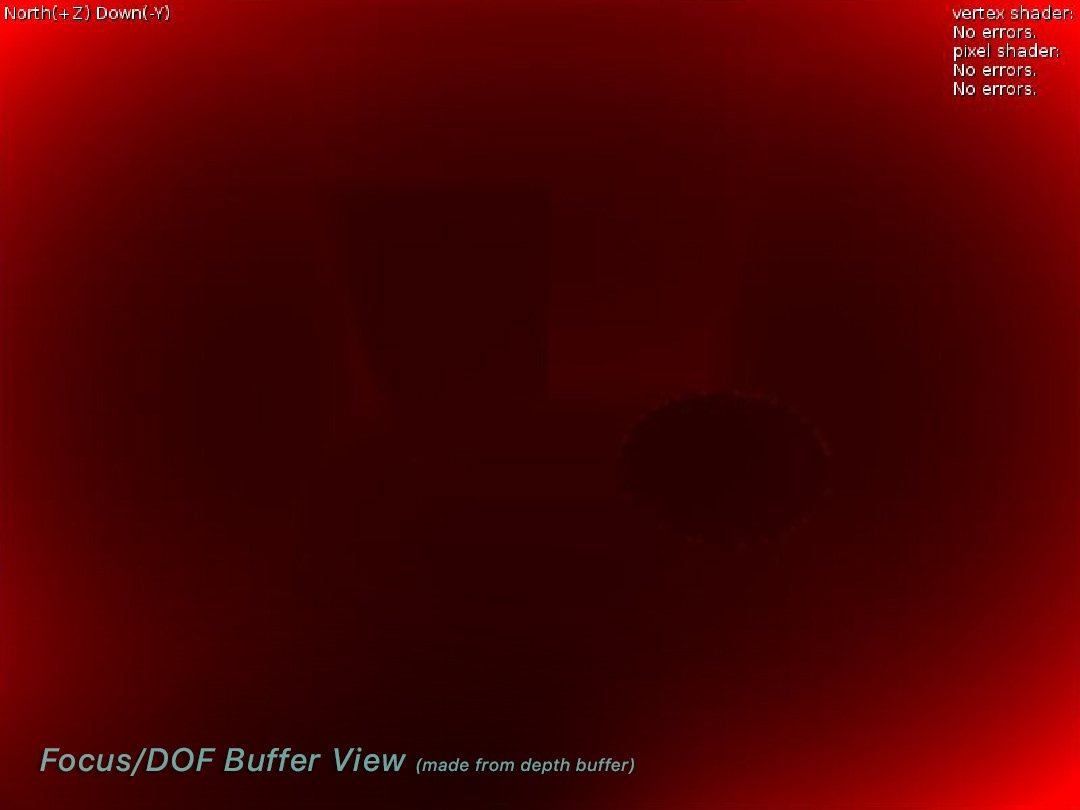

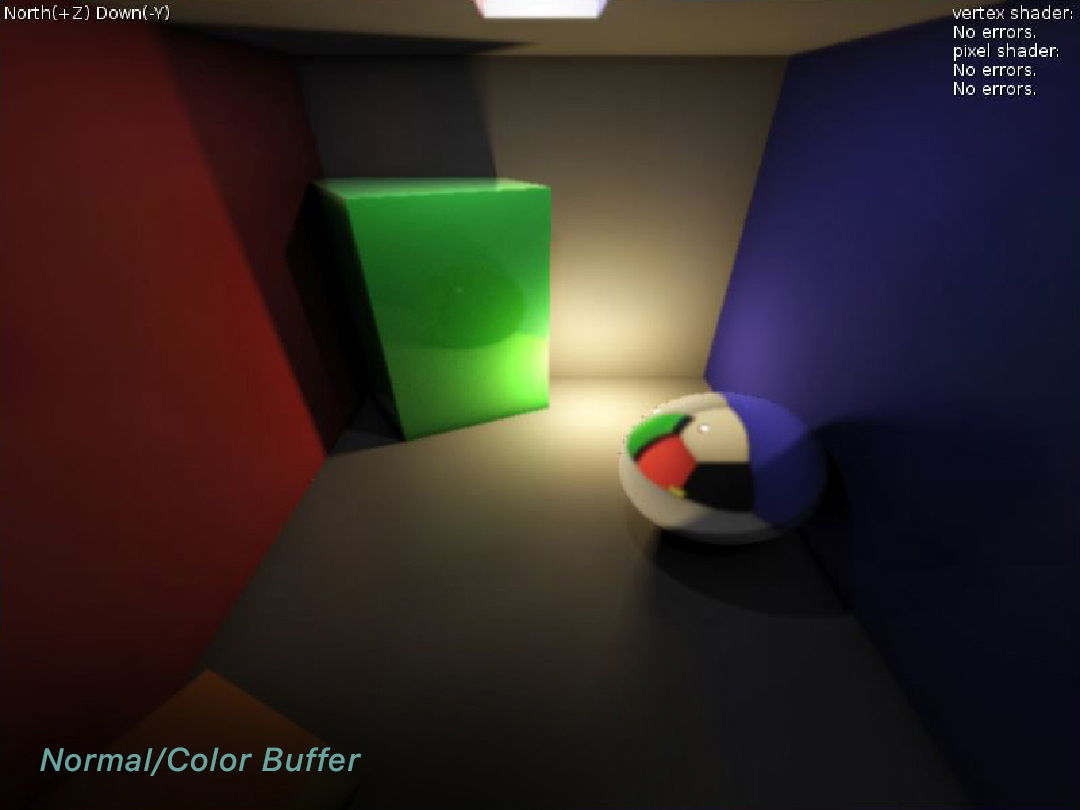

When calculating the color, texture, and lighting at a collision point, the engine uses a realistic approximation function to achieve PBR results. This function is called the bidirectional reflectance distribution function (BRDF), and practical, physically-based versions of it have been popularized by companies like Disney to make computer graphics match what we see in reality while staying efficient. The BRDF takes the ray's direction, the direction toward each light, and the hit object's material attributes, and outputs how much light is reflected back toward the camera. Combining this with the attributes of every light affecting the object produces the final color, which is written to the pixel the ray came from. Additional data simulates other aspects of light, such as rough surfaces scattering light in different directions (via normal and roughness maps). Post-processing passes then add further effects such as depth-of-field blur, lens flares, and custom effects like object outlines.
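The engine's exact BRDF is not documented here. As an illustrative sketch, the Python code below implements one widely used real-time microfacet model: Cook-Torrance specular with a GGX normal distribution, the Schlick-GGX form of the Smith geometry term, and Schlick's Fresnel approximation, plus Lambert diffuse. The function names, the metallic/roughness parameterization, and the 0.04 base reflectance for non-metals are assumptions in the style of common PBR pipelines, not details taken from this project.

```python
import math


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    n = math.sqrt(dot(v, v))
    return tuple(x / n for x in v)


def brdf_cook_torrance(n, v, l, albedo, roughness, metallic):
    """Cook-Torrance (GGX / Schlick-GGX / Schlick Fresnel) + Lambert diffuse.
    n, v, l are unit vectors: surface normal, direction to the viewer
    (the reversed camera ray), and direction to the light.
    Returns the BRDF value for each RGB channel of albedo."""
    n_dot_l = dot(n, l)
    n_dot_v = dot(n, v)
    if n_dot_l <= 0.0 or n_dot_v <= 0.0:
        return (0.0, 0.0, 0.0)  # light or viewer is below the surface

    h = normalize(tuple(a + b for a, b in zip(v, l)))  # half vector
    n_dot_h = max(dot(n, h), 0.0)
    v_dot_h = max(dot(v, h), 0.0)
    roughness = max(roughness, 0.05)  # avoid a singular GGX lobe at roughness 0

    # GGX normal distribution: density of microfacets oriented along h.
    a = roughness * roughness
    a2 = a * a
    denom = n_dot_h * n_dot_h * (a2 - 1.0) + 1.0
    D = a2 / (math.pi * denom * denom)

    # Smith geometry (shadowing/masking), Schlick-GGX form with k for direct lights.
    k = (roughness + 1.0) ** 2 / 8.0
    G = (n_dot_v / (n_dot_v * (1.0 - k) + k)) * (n_dot_l / (n_dot_l * (1.0 - k) + k))

    result = []
    for c in albedo:
        f0 = 0.04 * (1.0 - metallic) + c * metallic  # reflectance at normal incidence
        F = f0 + (1.0 - f0) * (1.0 - v_dot_h) ** 5  # Schlick Fresnel
        specular = D * G * F / (4.0 * n_dot_v * n_dot_l)
        diffuse = (1.0 - F) * (1.0 - metallic) * c / math.pi  # Lambert, minus reflected energy
        result.append(diffuse + specular)
    return tuple(result)


def shade(n, v, l, albedo, roughness, metallic, light_color):
    """Color contributed by one light: BRDF * light color * cos(angle to light)."""
    f = brdf_cook_torrance(n, v, l, albedo, roughness, metallic)
    n_dot_l = max(dot(n, l), 0.0)
    return tuple(fc * lc * n_dot_l for fc, lc in zip(f, light_color))
```

Summing `shade(...)` over every light affecting the hit point gives the pixel's color; a smaller `roughness` concentrates the specular lobe, which is how a roughness map changes a surface's appearance in this kind of model.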

This whole process is an efficient approach to ray casting, particularly in 3D, where finding each ray's first hit is the expensive part. The SDF lets rays skip large distances in a single step, saving time and iterations. The BRDF makes realistic lighting quick and practical to compute. Post-processing effects are also added efficiently after the initial render, using deferred rendering techniques that store per-pixel data in buffers for later passes. All rendering is done on the GPU within the LOVE2D (Lua) framework.
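To illustrate the deferred approach, here is a minimal CPU-side Python sketch of one post-process pass: the object-outline effect mentioned earlier. It assumes a hypothetical `GBuffer` holding a color buffer and a depth buffer written by the main ray-marching pass; the depth-discontinuity rule and the `threshold` value are illustrative choices, not the engine's actual shader.

```python
from dataclasses import dataclass
from typing import List, Tuple

Color = Tuple[float, float, float]


@dataclass
class GBuffer:
    """Hypothetical per-pixel buffers written by the main ray-marching pass."""
    width: int
    height: int
    color: List[List[Color]]  # shaded color per pixel
    depth: List[List[float]]  # distance along the ray to the hit (inf for sky)


def outline_pass(g: GBuffer, threshold: float = 0.5,
                 outline_color: Color = (0.0, 0.0, 0.0)) -> List[List[Color]]:
    """Post-process: paint pixels that are much nearer than some 4-neighbor
    (i.e. on an object's silhouette). Reads only the stored buffers, so no
    rays are marched again, and returns a new color buffer."""
    out = [row[:] for row in g.color]
    for y in range(g.height):
        for x in range(g.width):
            d = g.depth[y][x]
            for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                nx, ny = x + dx, y + dy
                if 0 <= nx < g.width and 0 <= ny < g.height:
                    # inf - inf is nan and compares False, so sky-to-sky is never an edge.
                    if g.depth[ny][nx] - d > threshold:
                        out[y][x] = outline_color
                        break
    return out
```

Because the pass only reads buffers that already exist, its cost depends on the screen resolution rather than on scene complexity, which is the main appeal of adding effects in a deferred stage.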

Overview of the Rendering Process

Another Example Scene

The Many Buffers

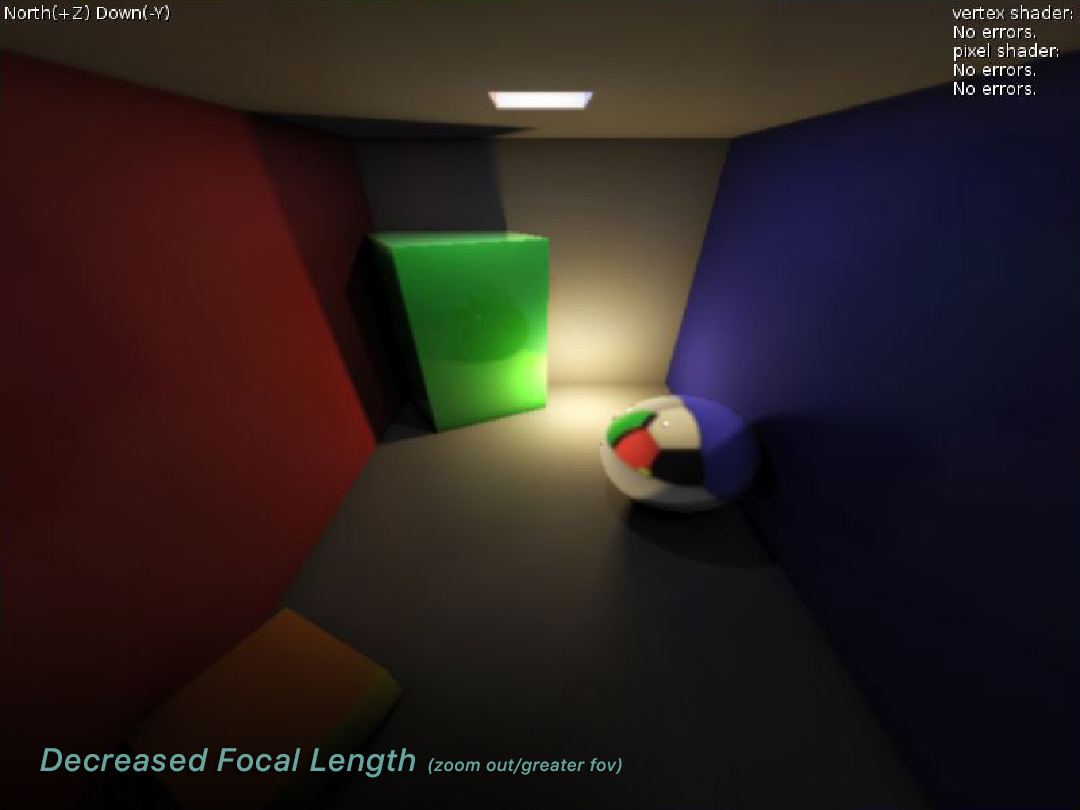

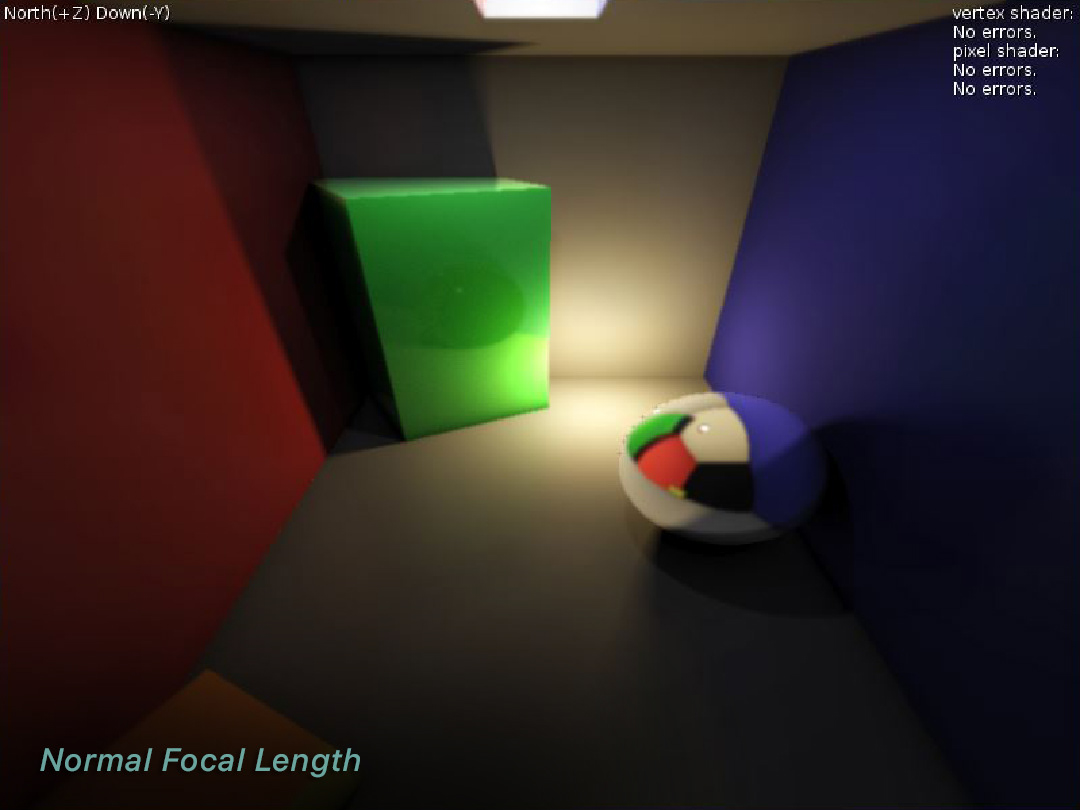

Camera Options (Focal Length & FOV)

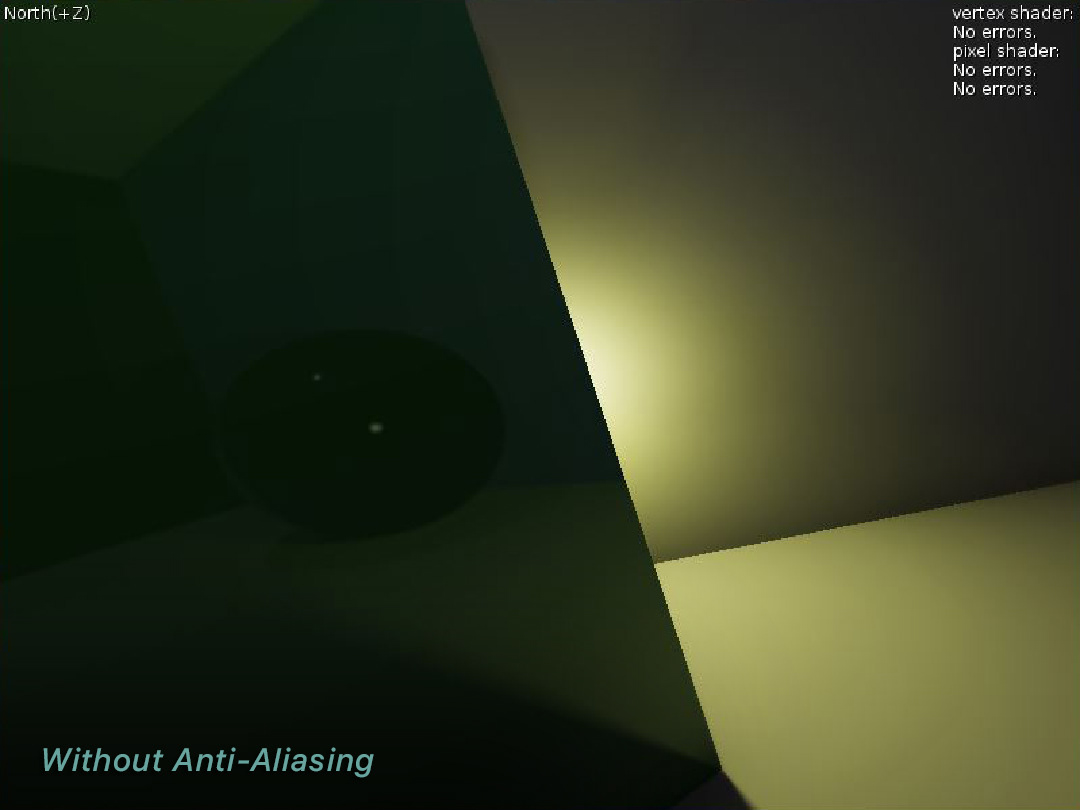

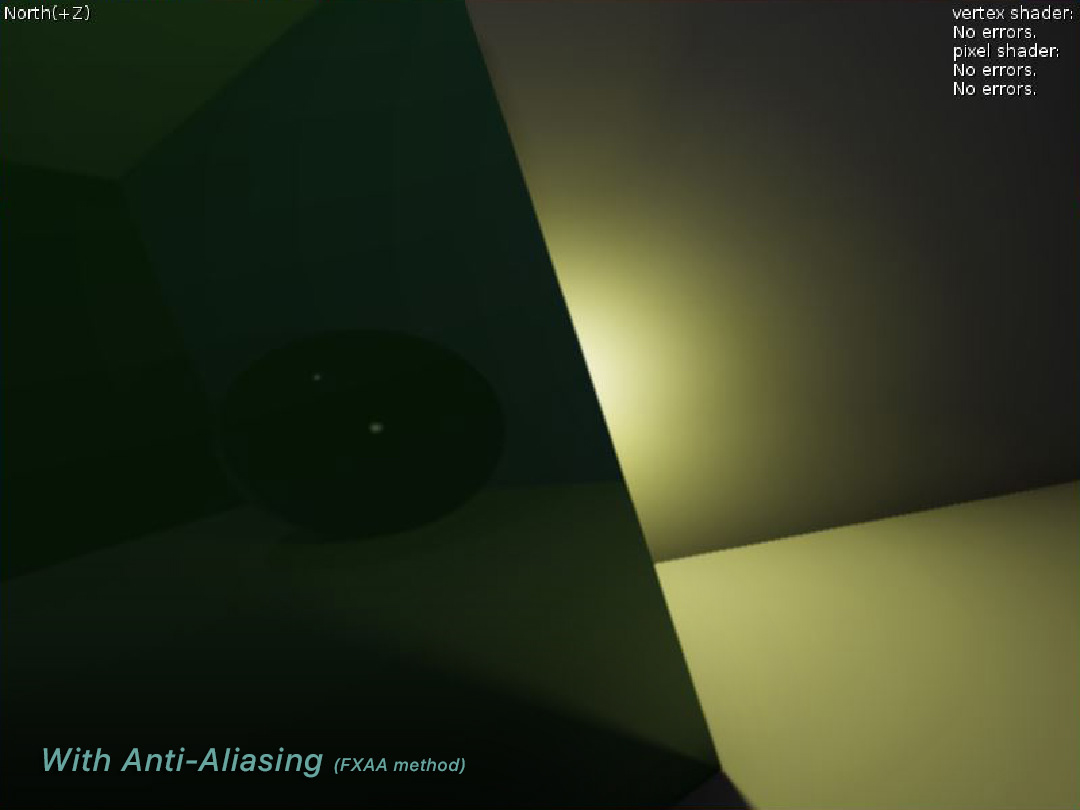

Anti-Aliasing (FXAA)

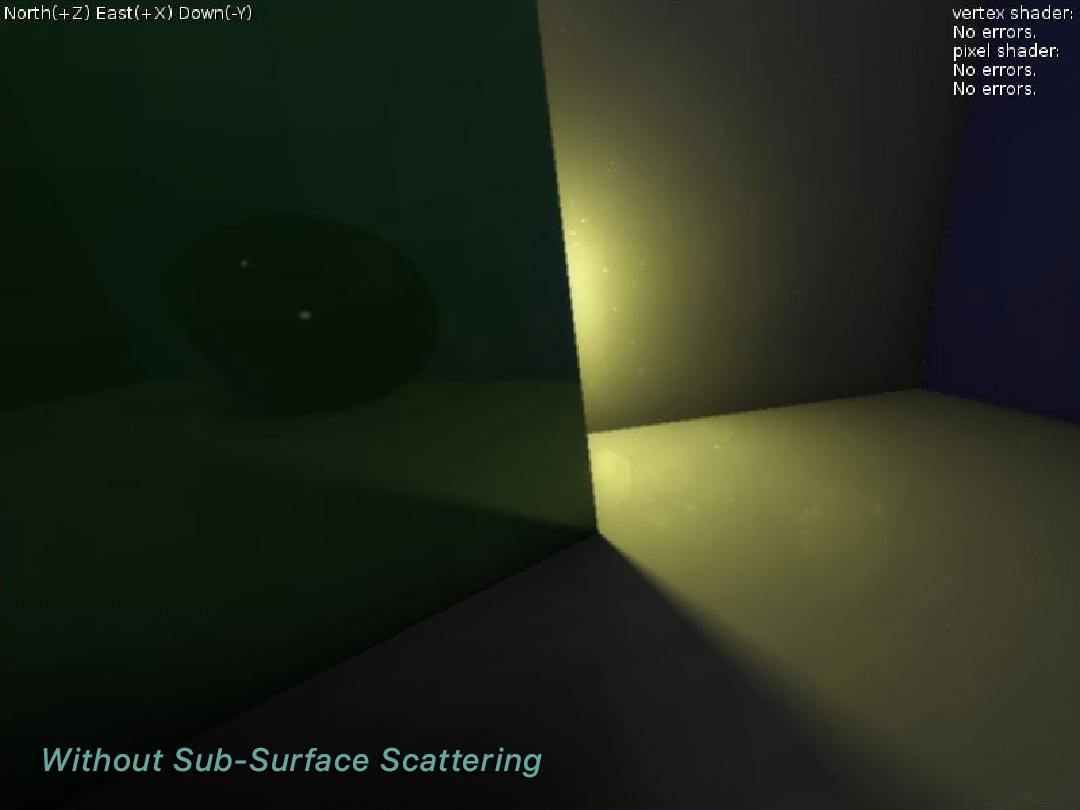

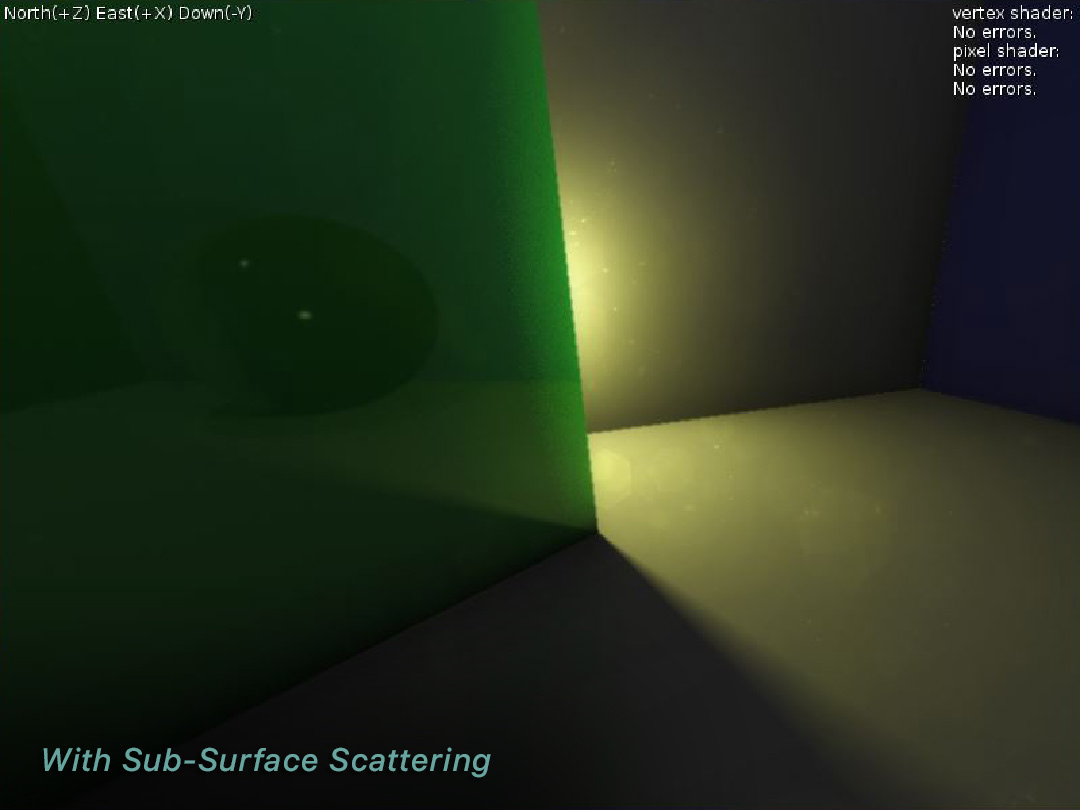

Sub-Surface Scattering

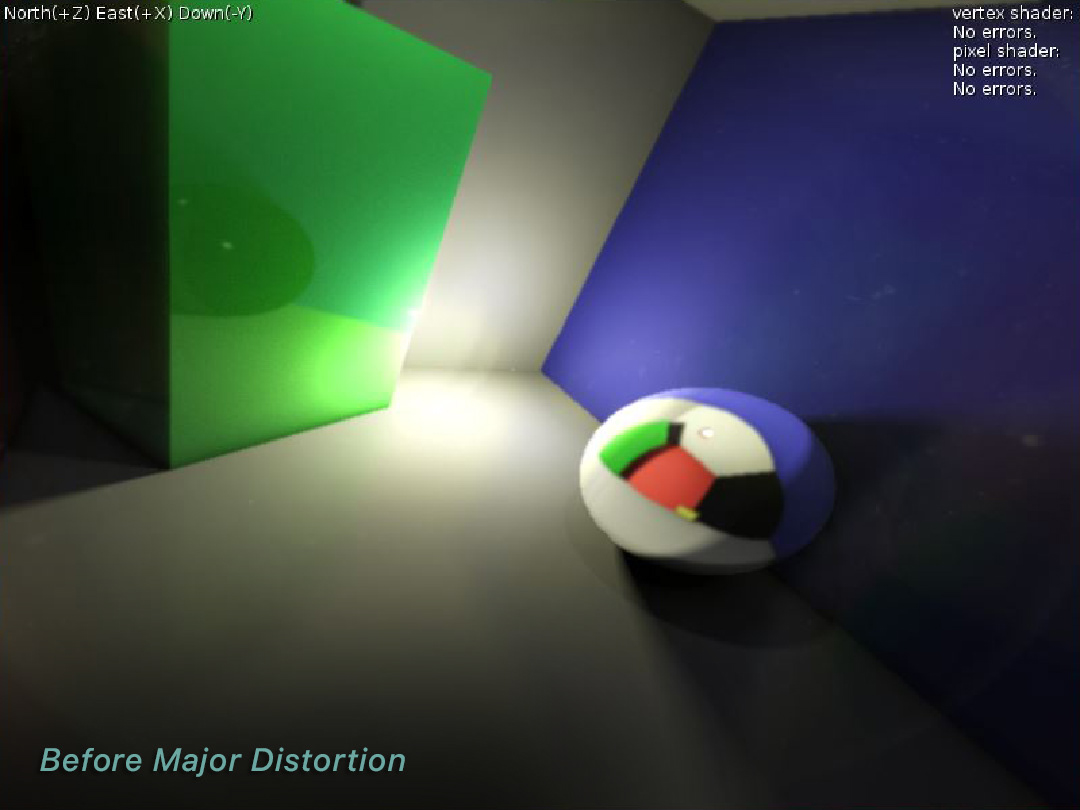

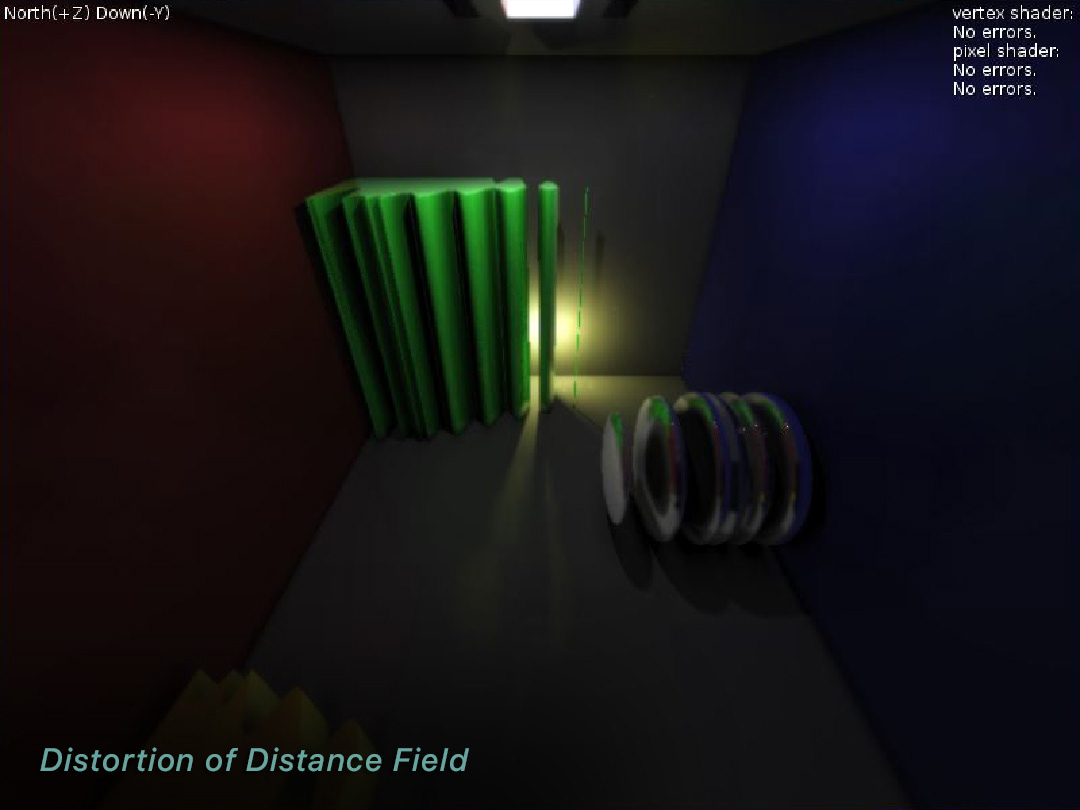

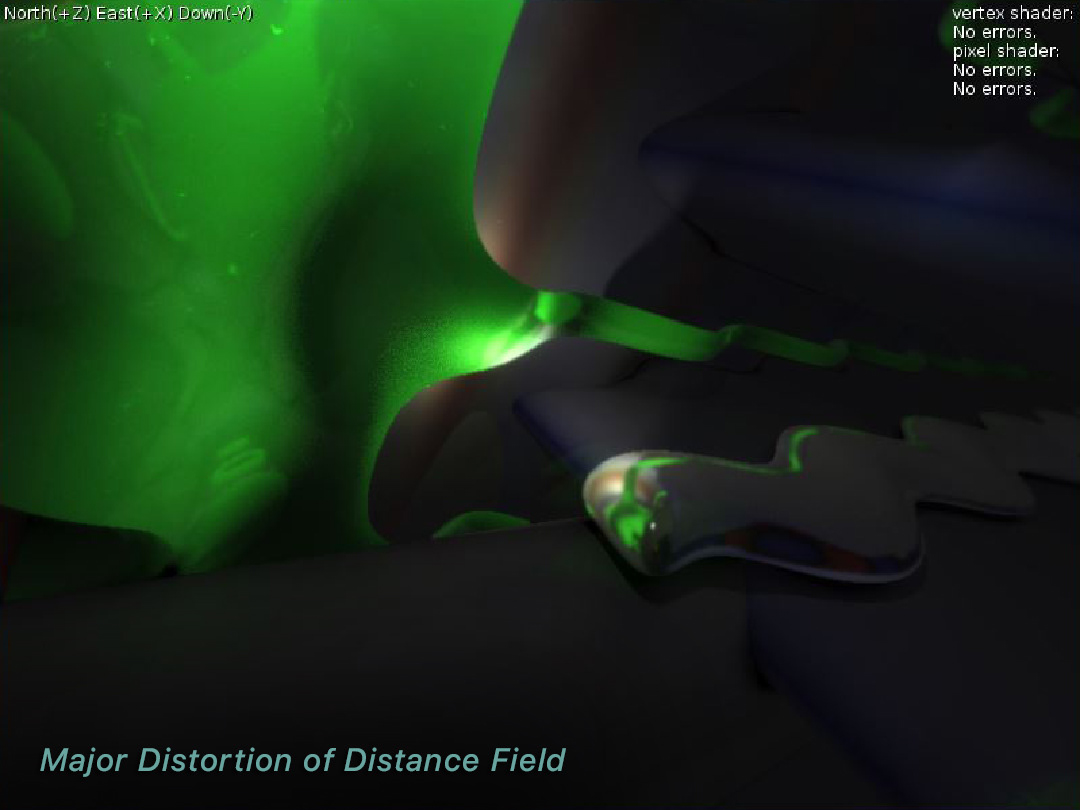

Signed-Distance Field Manipulation

Jakob Plotts | Artist • UX Designer • Programmer | 2019